Improving URL Security with Identicons

If you’ve followed the recent discussion about Chrome’s changes to how URLs are displayed in the address bar, you might want to skip directly to the second part of this blog post.

Existing Problems with URLs

With version 69, Google introduced a new feature in Chrome that strips subdomains from URLs in the address bar if Chrome considers them “trivial”. For example, when you enter the URL https://www.google.com, only google.com is visible in Chrome’s address bar. The scheme part of the URL is hidden as well; a “Secure” or “Insecure” badge is the only indication of whether you’re on HTTPS. But “www” is not the only subdomain that is considered trivial. The subdomain “m”, which is often used to serve a mobile version of a website, is also hidden. This way, https://en.m.wikipedia.org becomes en.wikipedia.org.

This change enraged many people on Hacker News and, while some defended the decision, many called it an attack on the Domain Name System and on standards.

One obvious reason for Google to strip down URLs in the address bar is to save horizontal space on mobile devices. Another reason is to make URL usage more secure for end users. In recent years, many browsers have started to highlight the hostname of a URL in the address bar by displaying the rest of the URL in gray. Safari even goes a step further and only displays the domain of a URL in the address bar. All of this is meant to make it obvious which website users are on.

In a recent story on Wired titled “Google Wants to Kill the URL,” Adrienne Porter Felt, an engineering manager on the Google Chrome team, is quoted as follows:

“People have a really hard time understanding URLs. They are hard to read, it’s hard to know which part of them is supposed to be trusted, and in general I don’t think URLs are working as a good way to convey site identity. So we want to move toward a place where web identity is understandable by everyone – they know who they’re talking to when they’re using a website and they can reason about whether they can trust them. But this will mean big changes in how and when Chrome displays URLs. We want to challenge how URLs should be displayed and question it as we’re figuring out the right way to convey identity.”

Personally, I have problems with this focus on the inexperienced user. Of course there are many people who have no idea how the World Wide Web and its underlying technologies work, and they have a right to navigate the web safely and easily without thinking about URLs. But on the other hand, URLs are an essential pillar of the web and the internet, along with HTTP, HTML and many other open standards that are controlled by the IETF or W3C. Hiding the technical details that drive the web makes things easier for those who struggle to use technology in general, but it also keeps people from learning how the web works, because they never get in touch with the web’s rough edges. While it might be in Google’s interest to blur the boundary between a search on Google and a concrete web page, it can’t be good for the openness of the web if Google expands its position as a gatekeeper. Some of its other initiatives, like Accelerated Mobile Pages (AMP), go in a similar direction.

So let’s come back to one of the good reasons for changing how users experience and interact with URLs: the security aspect. In order to know whether you’re on the website you want to be on, you need to know which part of the URL is relevant for security. And even if you know that the use of HTTPS and the hostname (domain) are relevant, hostnames can be difficult to tell apart, since they can contain Unicode characters through the use of Punycode (RFC 3492). The problem here is that Unicode contains many homoglyphs, characters that look essentially the same as other characters.

For instance, a malicious entity could register the domain аⅿаzоn.com, which, depending on the font used to render this article, can’t be distinguished from amazon.com. This way, users could be tricked into entering their credentials on the malicious website. This problem isn’t really tackled by highlighting the domain. In fact, browser vendors started to block requests to websites that use glyphs from different ranges of Unicode.
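To make the homoglyph problem more tangible, here is a minimal Python sketch that shows what such a look-alike label looks like once it is encoded with Punycode. The code points in `SPOOFED_LABEL` are my assumption of one possible look-alike spelling, and the helper names are made up for this post. The sketch uses Python’s built-in `punycode` codec directly and skips the mapping and normalization steps that real IDNA processing applies first, so treat it as an illustration rather than as what a browser does.

```python
import unicodedata

# Assumed code points for a look-alike label (Cyrillic letters plus a Roman
# numeral character); a real attack may use different characters.
SPOOFED_LABEL = "\u0430\u217f\u0430z\u043en"  # renders similar to "amazon"
GENUINE_LABEL = "amazon"


def to_ascii_label(label: str) -> str:
    """Return the ASCII form of a single hostname label."""
    if label.isascii():
        return label
    # The "punycode" codec implements the RFC 3492 encoding; the "xn--"
    # prefix marks the label as an internationalized one.
    return "xn--" + label.encode("punycode").decode("ascii")


def non_ascii_characters(label: str) -> list[str]:
    """List every non-ASCII character together with its Unicode name."""
    return [
        f"U+{ord(ch):04X} {unicodedata.name(ch, 'UNKNOWN')}"
        for ch in label
        if not ch.isascii()
    ]


if __name__ == "__main__":
    for label in (GENUINE_LABEL, SPOOFED_LABEL):
        print(repr(label), "->", to_ascii_label(label))
        for description in non_ascii_characters(label):
            print("   ", description)
```

The two labels look alike on screen, but the encoded form of the spoofed one starts with `xn--` and has nothing in common with `amazon`. That is the difference a user would have to notice in the address bar.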

A New Approach

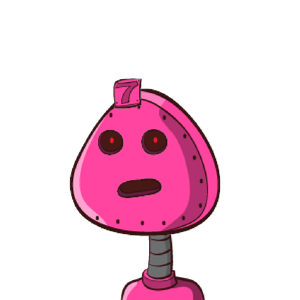

When I started to think about these problems, I immediately thought of identicons. Identicons are often used by forum or commenting software to distinguish multiple authors of posts when they didn’t upload an avatar to their account. The principle behind identicons is simple: calculate a hash of some string of text and render an image with the use of multiple, pre-defined geometric figures. Add some color and your identicon is ready. Identicons were invented by Don Park in 2007 “as an easy means of visually distinguishing multiple units of information, anything that can be reduced to bits. It’s not just IPs but also people, places and things.” These are two examples of identicons I generated using Don Park’s original implementation.
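The principle can be sketched in a few lines of Python. Note that this is not Don Park’s original algorithm, which composes rotated geometric patches. It is a simplified variant that fills a mirrored grid of squares, and the choice of SHA-256, the 5×5 size and the function names are all assumptions made for the sake of the example.

```python
import hashlib


def identicon(text: str, size: int = 5):
    """Derive a mirrored on/off grid and an RGB color from a hash of `text`."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    half = (size + 1) // 2  # number of columns generated before mirroring
    if size < 1 or size * half > len(digest) * 8:
        raise ValueError("size does not fit into the available hash bits")

    grid = []
    bit_index = 0
    for _ in range(size):
        left = []
        for _ in range(half):
            byte = digest[bit_index // 8]
            left.append(bool((byte >> (bit_index % 8)) & 1))
            bit_index += 1
        # Mirror the left half; for odd sizes the middle column is not repeated.
        mirrored = left[-2::-1] if size % 2 else left[::-1]
        grid.append(left + mirrored)

    # Use the last three digest bytes as the foreground color.
    color = (digest[-3], digest[-2], digest[-1])
    return grid, color


def render(grid) -> str:
    """Render the grid as text, two characters per cell."""
    return "\n".join(
        "".join("##" if cell else "  " for cell in row) for row in grid
    )


if __name__ == "__main__":
    grid, color = identicon("example.com")
    print(render(grid))
    print("color (RGB):", color)
```

The same input always yields the same picture, and mirroring the grid is what gives this kind of icon its recognizable, symmetric look.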

One valid issue with identicons was identified by Colin Davis, who created the service Robohash four years later and commented on Hacker News:

“Identicons are a great idea, I really love them.. They’re a good solution to a gut-check ‘Something is wrong here..’

Sort of like a SSH-fingerprint.

The problem I’ve had with them is that they’re generally not all that memorable. Was that triangles pointing left, then up, or up then left?

[Robohash] is my attempt at addressing that problem for my own new project […]”

So my idea was to use identicons or something similar to display a small, identifying icon next to a URL in the address bar of browsers, in order to easily identify phishing attacks on your most important web services. These icons would need to be rendered by the browser directly, without any possibility for website owners to change their appearance.

For example, here are the Robohash images for amazon.com and its scam version next to it:

Easily distinguishable, right?
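The reason two nearly identical-looking hostnames end up with unrelated images is that a cryptographic hash changes completely when the input changes even slightly. Here is a small sketch that counts how many hash bits differ between the two hostnames. SHA-256 is an assumption on my side (I’m not claiming this is what Robohash uses), and the code points of the spoofed name are assumed as well.

```python
import hashlib

GENUINE = "amazon.com"
# Assumed look-alike spelling using Cyrillic and Roman numeral characters.
SPOOFED = "\u0430\u217f\u0430z\u043en.com"


def digest_as_int(hostname: str) -> int:
    """Hash the hostname and return the digest as one big integer."""
    digest = hashlib.sha256(hostname.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def differing_bits(first: str, second: str) -> int:
    """Count the digest bits in which the two hostnames differ."""
    return bin(digest_as_int(first) ^ digest_as_int(second)).count("1")


if __name__ == "__main__":
    print("bits that differ out of 256:", differing_bits(GENUINE, SPOOFED))
```

For a good hash function, typically about half of the bits differ, so every visual feature that is derived from those bits has a fair chance of changing.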

Remembering identicons for a small number of websites would be enough to safely navigate the web. If an identicon looked suspicious, you’d simply not enter your personal information on that website.

Making this work securely across the vast number of domains on the web is a little difficult, though. Depending on the algorithm used, most types of identicons can only have up to hundreds of millions of combinations. This is certainly not enough to be applied globally, and there will certainly also be images that look very much like others. If two similar-looking identicons also belonged to similar-looking domains, this could get really dangerous. So to improve this, we would need to use a state-of-the-art hash algorithm like SHA-3 and also let every resulting bit change some feature of the identicon. In order to make this possible, multiple identicon types could be combined, or new variations of existing types could be created.
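To get a feeling for the numbers, here is a rough sketch with two parts. The first estimates, using the usual birthday approximation, how likely it is that at least two domains share an icon. The second splits a complete SHA3-256 digest into small values that could each drive one visual feature. The figure of 300 million icons is only my stand-in for “hundreds of millions”, and the function names and the 8-bit field size are assumptions as well.

```python
import hashlib
import math


def collision_probability(num_domains: int, num_icons: int) -> float:
    """Estimate the chance that at least two domains get the same icon."""
    # Birthday approximation: P ~ 1 - exp(-n * (n - 1) / (2 * N))
    exponent = num_domains * (num_domains - 1) / (2.0 * num_icons)
    return 1.0 - math.exp(-exponent)


def features_from_hostname(hostname: str, field_bits: int = 8) -> list[int]:
    """Split the full SHA3-256 digest into values of `field_bits` bits each."""
    if field_bits < 1:
        raise ValueError("field_bits must be positive")
    digest = hashlib.sha3_256(hostname.encode("utf-8")).digest()
    value = int.from_bytes(digest, "big")
    total_bits = len(digest) * 8
    mask = (1 << field_bits) - 1
    # Every bit of the digest ends up in exactly one feature value.
    return [(value >> shift) & mask for shift in range(0, total_bits, field_bits)]


if __name__ == "__main__":
    assumed_icons = 300_000_000  # stand-in for "hundreds of millions"
    for domains in (1_000, 100_000):
        chance = collision_probability(domains, assumed_icons)
        print(f"{domains} domains: collision chance {chance:.4f}")
    print(features_from_hostname("example.com"))
```

Under these assumptions, a collision somewhere among 100,000 domains is practically certain, while the 32 feature values taken from the full digest span a space of 2^256 combinations. The open question is whether that many features can be drawn in a way that humans can still tell apart.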

I should probably start developing a browser extension that implements this idea to see how it works in practice. If you think this idea is worth a try, feel free to go ahead and implement something yourself!

What do you think about this idea? Let me know in the comments or on Hacker News!